Large corps and government entities usually dive into the innovation wave either out of obligation, in the face of nonstop threats from new entrants, or under the sole impetus of a visionary leader. In either case, the underlying objective, surprising as it might seem, is rarely a business one.

Most of the time, the product (MVP) becomes the ultimate objective and the functional team starts piling up feature requirements on the tech team. Of course, nobody questions this way of working as it is the way it has always been done. Functional teams are the experts: they know the market, they know their customers and hence what features need to be developed. With some luck, this could actually work. However, most of the time it doesn't.

“The MVP has just those features considered sufficient for it to be of value to customers and allow for it to be shipped or sold to early adopters. Customer feedback will inform future development of the product.”

― Scott M. Graffius, Agile Scrum: Your Quick Start Guide with Step-by-Step Instructions

Defining Product Success

The lack of a governing, quantifiable business objective has several bad implications, for instance:

i) Prioritization becomes an intuitive, hunch-based exercise. So, in order to reduce risk, functional teams pile up the maximum number of features, claiming that they are all critical for the product launch. This has 2 implications:

- Budget spending explodes with unclear ROI

- Worse, if the product launch falls below expectations (which is quite likely), it is extremely hard to find out why, as the product already contains lots of features. Working out which feature is incorrectly implemented or which part of the product is hurting conversion ratios becomes a real brain teaser.

Having a quantifiable business objective greatly helps the prioritization exercise, as the question becomes: "Let's score each of these potential features from 1 to 4 according to how much it helps our specific business objective." At Nimble Ways, we call that the Definition of Success.

ii) With no business objectives tied to the product, it is simply not possible to do any form of steering. The product is out there in the market, the accountability has been passed on to some other team in the organization and the question that stands is "Now, what?"
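The scoring exercise described under i) can be sketched in a few lines of Python. This is only an illustration: the feature names, the scores and the `rank_features` helper are hypothetical, and only the 1 to 4 scale comes from the text above.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    score: int  # 1 (barely helps the business objective) to 4 (helps the most)


def rank_features(features: list[Feature]) -> list[Feature]:
    """Return features sorted from most to least helpful to the objective."""
    for feature in features:
        if not 1 <= feature.score <= 4:
            raise ValueError(f"{feature.name}: score must be between 1 and 4")
    # sorted() is stable, so features with equal scores keep their input order.
    return sorted(features, key=lambda f: f.score, reverse=True)


# Hypothetical backlog, scored against a hypothetical business objective.
backlog = [
    Feature("social login", 2),
    Feature("one-click checkout", 4),
    Feature("dark mode", 1),
    Feature("saved carts", 3),
]

for feature in rank_features(backlog):
    print(feature.score, feature.name)
```

The point of the sketch is that the ranking falls out mechanically once every feature is scored against the same objective; the hard part remains agreeing on the objective and on the scores.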

In this article, I will address precisely this question: "Now, what?" It is the same question that startups phrase differently: "How do we scale?" In my experience, startups have been far better at answering it as, for them, it is a matter of life and death.

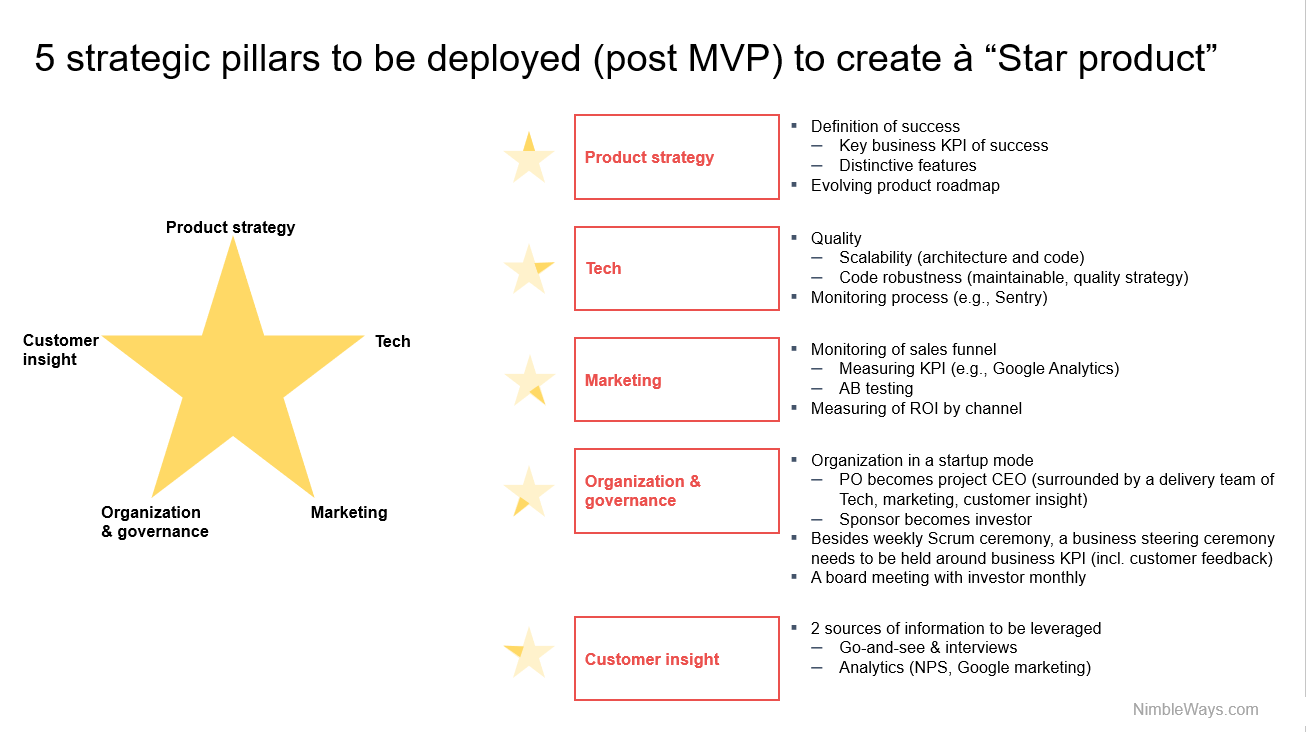

How to create a Star Product: the 5 things to get going

Let's now take them one at a time.

I. Product strategy

Product strategy is key: it is at the core of the product's "why". It lays out the purpose the product is here to fulfill and the road to get there.

The first core component of the product strategy is the business objective, ideally quantitative. As mentioned above, it is key for prioritization and steering once the product is in the hands of users.

The second core component is the "How": what makes the product's purpose achievable, i.e. the distinctive features. Those features are the competitive advantage of the startup that made the product. They need to be clearly defined and prioritized.

A good example of a product without distinctive features is Microsoft Zune. It came too late and offered no clear additional value. It died very quickly despite Microsoft's marketing war machine.

The third core element of strategy is the evolving roadmap. Probably here the word evolving carries more weight and importance than the word roadmap. And probably it is the main reason big corps have a lot to learn from startups.

Startups' youth and eagerness to learn from the market are probably among their biggest strengths vs. big corps. Startups see the market as a lab for testing while big corps see it as a jury that will judge them. Startups take a true MVP, value-based approach and then build up features, while big corps pile up features as they feel they're "supposed" to know (plus some other biases like showing off in front of their competitors). The roadmap has to keep evolving as knowledge is inferred from the market and the users.

Another typical example of a product roadmap that failed to evolve is Blockbuster. While Netflix emerged with DVD delivery by mail and early streaming services, Blockbuster didn't adapt its product strategy.

II. Tech

When it comes to technology, one thing is to be ensured above all: it must just work. And keep working over time. No matter how many users, no matter what country the users are in, no matter how many features are added per day. This article is not meant to explain in detail how to ensure product quality (because that's what it is about) from a tech perspective, but to give key items to consider when aiming at achieving it.

i) Scalability: this seems obvious but it goes beyond the architecture design. Of course, architecture must be designed to scale effortlessly (or almost). But the devil is in the code. One bit of code uses a hashmap that was not optimized for over 1000 entries and the whole app slows down. Users are long gone.

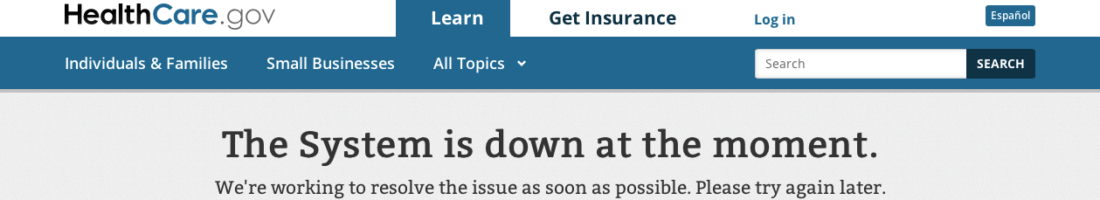

One relatively recent example of a product that failed to scale, with a gigantic impact on a nation, is the Obamacare web platform. It froze from 20K connections. Result: 80% of potential subscribers couldn't subscribe and USD 600M were lost. Some details here.
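To illustrate how "the devil is in the code", here is a minimal Python sketch. It does not reproduce the hashmap problem mentioned above; it swaps in a simpler, related case, a lookup that scans a list versus one that uses a dictionary. The user records, sizes and function names are made up for illustration.

```python
import timeit
from typing import Optional

# Hypothetical user records; names and sizes are made up for illustration.
users = [(f"user{i}", i) for i in range(10_000)]
users_by_name = dict(users)


def find_by_scan(name: str) -> Optional[int]:
    """Linear scan: the cost grows with the number of entries."""
    for candidate, user_id in users:
        if candidate == name:
            return user_id
    return None


def find_by_dict(name: str) -> Optional[int]:
    """Hash lookup: the cost stays roughly constant on average."""
    return users_by_name.get(name)


# Look up the last entry, which is the worst case for the scan.
target = "user9999"
scan_time = timeit.timeit(lambda: find_by_scan(target), number=200)
dict_time = timeit.timeit(lambda: find_by_dict(target), number=200)
print(f"scan: {scan_time:.4f}s, dict: {dict_time:.6f}s")
```

Both functions return the same result, so nothing looks wrong in a demo with a handful of users. The gap only shows up as the data grows, which is why this kind of issue tends to surface in production rather than in testing.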

ii) Code robustness: again obvious, but not easy to ensure. Every tech team has its strategy to ensure the code is readable, maintainable and optimized. This can be achieved by putting in place a certain number of measures and shared practices (automated testing, peer-review process, smart linters integrated into the IDE, tools like SonarQube, mandatory comments, etc.). But this is not enough. A team that aspires to having robust code needs to define and track a set of indicators through visual management and run kaizen when necessary.

A couple of examples illustrate the importance of code quality. Both come to us from France.

The Velib' app in Paris. For a long time, Paris Mayor Anne Hidalgo praised the emergence of mobility tech and its pivotal importance in a metropolitan city. Yet when the app was released, it had an overrun of 8 months, 600 stations were still inactive and, much worse, the app was giving wrong information on bike availability. More details here.

Another example is the famous "prélèvement à la source" (employees no longer pay their taxes themselves; the tax is automatically debited at the source before their salary reaches their bank accounts) introduced by French President Emmanuel Macron. This initiative, regardless of its economic relevance, didn't quite serve the reputation of the current government. It came with a 1-year delay vs. the publicly communicated timeline; besides, testing before production revealed that many taxpayers were debited more than once.

iii) Monitoring errors and all sorts of bad behavior: the tool that we have been using at Nimble Ways, and that has proven to be quite simple and efficient, is Sentry (https://sentry.io). I highly recommend it for almost every product. It has greatly helped us improve the products we developed for our clients by giving us (and our clients) the exact information we need, allowing us to spot errors (and improve user experience) and saving us countless hours of investigation. Be it for Web, mobile or data processing apps, it is quite helpful.

III. Marketing

In this section, I will not go into details as this topic is better covered by other specialized authors (for example, Growth Hacker Marketing by Ryan Holiday).

However, companies do not need to have a digital marketing expert on board in order to deliver on this front. A couple of aspects, in my opinion, need to be considered and worked on:

1- The sales funnel needs to be monitored. Several online tools offer this possibility (Google Tag Manager and Google Analytics are the most famous examples). Besides, in order to optimize, some A/B testing can be performed and fine-tuned (e.g., using Google Optimize).

2- Search engine ranking is key. Google offers Lighthouse, a tool that lets you know how Google perceives your Web application. Each criterion is thoroughly explained and subdivided.

Any basic Web marketer should know (master) these two areas.
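To make the A/B testing point more concrete, here is a minimal Python sketch of how one might check whether a difference in conversion between two variants is more than noise. It uses a standard two-proportion z-test, which is my choice for the illustration and not something prescribed by the tools mentioned above. The function name and all visitor and conversion numbers are hypothetical.

```python
import math


def two_proportion_z_test(
    conv_a: int, n_a: int, conv_b: int, n_b: int
) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled conversion rate under the hypothesis that A and B perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("cannot test when nobody or everybody converted")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical funnel step: variant A is the current page, B the new one.
z, p_value = two_proportion_z_test(conv_a=120, n_a=2400, conv_b=156, n_b=2400)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
```

A small p-value suggests the difference is unlikely to be due to chance alone. In practice, dedicated A/B testing tools handle this computation, along with traffic splitting and stopping rules, so the sketch is mainly useful for understanding what such tools report.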

IV. Organization & governance

This is probably one of the areas where big companies can learn a lot from startups.

In recent years, there has been a significant shift in the way many companies deliver software. They moved from the classical waterfall framework to agile. That in itself is a huge step.

However, a deeply rooted silo mode slowly creates a drift between tech teams and customer-facing teams. Once the application is in production, it looks like "the job is done". So teams start splitting and going back to "normal" functioning, in which each team manages its usual tasks and responsibilities separately.

But then, how on earth is the product supposed to scale? This is when a startup needs to arise.

The Product Owner becomes the startup CEO and surrounds himself with a delivery team consisting of a tech team, a marketing officer and a customer insight specialist. The MVP sponsor (in the company) becomes the investor. This team is the startup and its purpose is to scale the product.

In order for this team to succeed, it must be 100% dedicated to this job (except for the investor). This in itself is a great challenge for a large corporation as each member of the team probably has many other responsibilities. They must be detached for at least a year and fully dedicated to the product in order to have a chance.

Two key meetings need to take place in addition to the pre-existing Scrum (or whatever framework) meetings that were set up during the MVP build phase.

1- Business steering ceremony: this is done weekly between the startup CEO and the delivery team. They go through all the KPIs, especially the Definition of Success KPI and the customer feedback KPI.

The objective at the end of each meeting is to define the plan of action of the week for the team but also prioritize the product backlog.

This meeting is held in addition to the usual agile meetings (review, planning) because it is the one in which business and success metrics are shown and discussed.

2- Board meeting: done monthly between the investor and the CEO. During these meetings, the CEO lays out the startup's key metrics and his plan to scale, including where he intends to put resources. The investor challenges his performance and his plans.

V. Customer insight

As described by Eric Ries, when you first launch an MVP, what matters most to you is the validated learning. What you don't want to do is to assume things. Every bit of the product must be put to test and validation from users (that's why agile is good as it lets you ship small bits regularly and makes it easier to identify what works and what doesn't).

However, it must be kept in mind that users will NOT tell you what to do. They will not tell you "make your product this way and then I'll like it and use it". Users are like a black box that will only tell you whether it likes what you have done or not. That's because most users respond emotionally (right side of the brain) to what they experience before they analyze anything (if they do).

So how do you extract insight from them? Simple, 3 sources of information:

Go-and-sees, a must that I can't emphasize enough. Genchi Genbutsu, as our Japanese lean masters from Toyota call it.

The key point here is that it doesn't have to be a big event! Large corps tend to hire "expert agencies" to perform this as they're scared they wouldn't know how to do it (but also to shift responsibility onto external people in case this whole thing goes bust). In fact, it is very simple to perform a good go-and-see. The product team must do it on a regular basis. All they have to do is bring in a handful of users, put the product in front of them, observe and ask questions.

For the record, great US startups perform 10 to 20 go-and-sees per week. (source: Inspired by Marty Cagan)

Analytics: many KPIs can be tracked, and one that is of key importance is NPS (Net Promoter Score). NPS has been the North Star followed by most of the big names of the internet (Amazon, Airbnb, Uber) as it is a strong indication of the propensity to scale (through the mighty word-of-mouth mechanism).

Unfortunately, large corps new to digital technologies rarely know their NPS, or only occasionally hire a communication agency to compute it through polls. The problem with one-off polls is that the company can't track its NPS over time and hence can't make decisions on its products through this metric.

At Nimble Ways, we have been using Wootric (https://wootric.com) but other products do the trick as well (Delighted, Qualtrics).
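For readers unfamiliar with how NPS is computed, here is a minimal Python sketch using the usual definition: on a 0 to 10 "how likely are you to recommend us" scale, the score is the percentage of promoters (9 or 10) minus the percentage of detractors (0 to 6). The function name and the weekly ratings are hypothetical, and the tools named above handle the collection and tracking for you.

```python
def net_promoter_score(ratings: list[int]) -> float:
    """Return the NPS, from -100 to 100, for a list of 0-10 ratings."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(not 0 <= rating <= 10 for rating in ratings):
        raise ValueError("ratings must be between 0 and 10")
    promoters = sum(1 for rating in ratings if rating >= 9)
    detractors = sum(1 for rating in ratings if rating <= 6)
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical survey answers collected over two consecutive weeks.
weekly_ratings = {
    "week 1": [10, 9, 8, 6, 3, 10, 7, 9],
    "week 2": [10, 9, 9, 8, 7, 10, 5, 9],
}

for week, ratings in weekly_ratings.items():
    print(week, net_promoter_score(ratings))
```

Computing the score per period, rather than once a year through a poll, is what makes it usable as a steering metric: the team can see whether a release moved it up or down.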

Conclusion

To sum it up, it is likely that large corps and government entities that are not familiar with digital technologies and approaches will continue to experiment using a mix of what they hear from consultants (agile, agile at scale, time-to-market, micro-service architecture, etc.) and their legacy (both tech and mindset). The sooner they look in depth into how successful startups managed to scale, the better it will be for their bottom line.