TL;DR

- Help search engines find your website faster by adding a sitemap.

- Control your website's crawlability with a robots.txt file that keeps unfinished and duplicate content from being indexed.

- Add meta tags to help search engines understand your content.

- Maintain good performance.

Without a doubt, being at the top of search results is key for most companies. The discipline behind this is called SEO (search engine optimization). Wikipedia defines it as the process of affecting the visibility of a website or web page in a web search engine's unpaid results. In other words, it's everything you do to earn a high search engine ranking for free. Unfortunately, it is not an exact science, but a couple of decades have been enough to crack some of its secrets.

In this article, I will use Gatsby, a great tool for building a personal or business website, to implement a few technical optimizations that can play a big role in your search engine results!

⚠️ It's undeniable that the most important factor to get a good ranking is rich and original content. Let's assume that your website respects this factor and dive deeper into more technical factors.

🕷 Crawlability: Content Quality and Accessibility

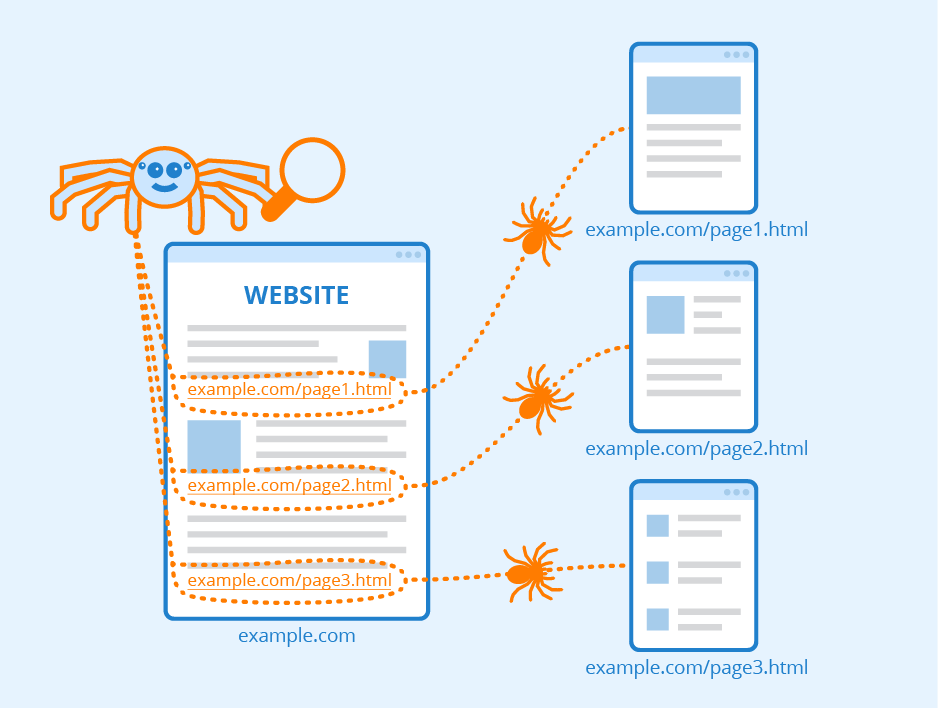

Crawlability is the first step on your SEO journey. Being crawlable means being discoverable by search engine bots (spiders, crawlers). For example, Googlebot (the generic name for Google's web crawlers) starts by finding a few web pages on your site, then analyzes those pages to find new URLs and adds them to a huge database of URLs called the index, from which information is retrieved when a user searches for the content on that page.

Everything pushed to the internet is crawlable, but how quickly will it be crawled? This is where a sitemap comes in handy.

A sitemap is a file where you provide information about the pages, videos, and other files on your site, and the relationships between them. Search engines like Google read this file to more intelligently crawl your site. A sitemap tells Google which pages and files you think are important in your site, and also provides valuable information about these files: for example, for pages, when the page was last updated, how often the page is changed, and any alternate language versions of a page - Google
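To make the definition concrete, here is a minimal sketch of what producing a sitemap by hand could look like. The `pages` list, its dates, and the helper names `escapeXml` and `buildSitemap` are made up for illustration; only the XML structure comes from the sitemaps.org protocol.

```javascript
// Hypothetical page list; on a real site this would come from your CMS or router.
const pages = [
  { path: "/", lastmod: "2020-01-15" },
  { path: "/blog/seo-with-gatsby/", lastmod: "2020-02-01" },
];

// Escape the characters that must not appear raw in XML text.
function escapeXml(value) {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Build a sitemap document with one <url> entry per page.
function buildSitemap(siteUrl, pageList) {
  const entries = pageList
    .map(({ path, lastmod }) => {
      // Resolve the path against the site URL to get an absolute location.
      const loc = escapeXml(new URL(path, siteUrl).href);
      return `  <url>\n    <loc>${loc}</loc>\n    <lastmod>${lastmod}</lastmod>\n  </url>`;
    })
    .join("\n");
  return (
    '<?xml version="1.0" encoding="UTF-8"?>\n' +
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n' +
    `${entries}\n` +
    "</urlset>"
  );
}

console.log(buildSitemap("https://www.example.com", pages));
```

Even this small version has to handle absolute URLs and escaping, and it still needs an up-to-date list of every page, which is the part that gets painful on a large site.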

Writing a sitemap by hand is tedious, especially for large sites such as news websites, where many categories and articles need to be indexed. Fortunately, Gatsby can generate it for you with gatsby-plugin-sitemap.

All you need to do is install it:

    npm install gatsby-plugin-sitemap

And add it to your gatsby-config file:

    module.exports = {
      siteMetadata: {
        // Used as the base for every URL in the generated sitemap
        siteUrl: `https://www.example.com`,
      },
      plugins: [`gatsby-plugin-sitemap`],
    }

Be careful to define the siteUrl to ensure that all URLs in the sitemap are correct.

You can also override the default options to exclude specific pages or groups of pages from your sitemap using the options parameter.

    {
      resolve: `gatsby-plugin-sitemap`,
      options: {
        exclude: ['/admin', '/confirmed'],
        ...
      },
    },

As mentioned above, everything on the internet gets crawled eventually. It's only a matter of time. This can be a double-edged sword: unfinished pages get indexed too, and you may be penalized for “low-quality content”.

This can be solved by adding a robots.txt file at the root of the website.

A robots.txt file tells search engine crawlers which pages or files the crawler can or can't request from your site. - Google

    # Group 1
    User-agent: Googlebot
    # The user agent named "Googlebot" crawler should not crawl the folder
    # http://example.com/nogooglebot/ or any subdirectories.
    Disallow: /nogooglebot/

    # Group 2
    User-agent: *
    # All other user agents can access the entire site. (This could have been
    # omitted and the result would be the same, as full access is the assumption.)
    Allow: /

    Sitemap: http://www.example.com/sitemap.xml
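To get a feel for how a crawler reads these rules, here is a simplified sketch of the matching logic for a single user-agent group. The `isAllowed` function and the rule objects are hypothetical, and the sketch deliberately ignores wildcards (`*` and `$`). It follows the precedence Google documents: the longest matching path wins, and an allow rule wins a tie.

```javascript
// Rules for one user-agent group, mirroring "Group 1" in the example above.
const googlebotRules = [{ type: "disallow", path: "/nogooglebot/" }];

// Simplified check: the longest matching path prefix wins, and an
// "allow" rule wins a tie. Wildcards (* and $) are not handled here.
function isAllowed(rules, urlPath) {
  let best = null;
  for (const rule of rules) {
    // An empty path matches nothing; otherwise match by prefix.
    if (rule.path === "" || !urlPath.startsWith(rule.path)) continue;
    const longer = best === null || rule.path.length > best.path.length;
    const tieAllow =
      best !== null &&
      rule.path.length === best.path.length &&
      rule.type === "allow";
    if (longer || tieAllow) best = rule;
  }
  // No matching rule means the path may be crawled.
  return best === null || best.type === "allow";
}

console.log(isAllowed(googlebotRules, "/nogooglebot/page.html")); // false
console.log(isAllowed(googlebotRules, "/blog/")); // true
```

Real crawlers also pick the group by user agent first and support pattern matching, so treat this as a mental model rather than a validator.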

In some cases, you may end up deploying several versions of your website, for example dev and prod, or Netlify "deploy previews". Those versions are crawled and indexed by search engines too, which can lead to a "duplicate content" penalty. Maintaining a different robots.txt by hand for each version is not a good solution. Once again, a plugin can handle this for you: gatsby-plugin-robots-txt.

    module.exports = {
      plugins: [
        {
          resolve: 'gatsby-plugin-robots-txt',
          options: {
            host: 'https://www.example.com',
            sitemap: 'https://www.example.com/sitemap.xml',
            // Choose which entry of `env` below applies to this build
            resolveEnv: () => process.env.GATSBY_ENV,
            env: {
              // Non-production builds: block all crawlers
              development: {
                policy: [{ userAgent: '*', disallow: ['/'] }]
              },
              // Production: allow everything
              production: {
                policy: [{ userAgent: '*', allow: '/' }]
              }
            }
          }
        }
      ]
    };
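For Netlify deploy previews specifically, the environment can be derived from the build context instead of a custom variable. The sketch below assumes Netlify's `CONTEXT` build variable (with values such as `production`, `deploy-preview`, and `branch-deploy`); the `resolveRobotsEnv` helper and its fallback order are illustrative choices, not part of the plugin.

```javascript
// Pick the robots.txt environment name from the build context.
// CONTEXT is set by Netlify during builds; falling back to NODE_ENV and
// then to "development" is an assumption made for this sketch.
function resolveRobotsEnv(env = process.env) {
  const context = env.CONTEXT || env.NODE_ENV || "development";
  // Only the production build should be crawlable; everything else is blocked.
  return context === "production" ? "production" : "development";
}

console.log(resolveRobotsEnv({ CONTEXT: "deploy-preview" })); // development
console.log(resolveRobotsEnv({ CONTEXT: "production" })); // production
```

You could then pass it to the plugin as `resolveEnv: () => resolveRobotsEnv()`, so previews and branch deploys all receive the blocking policy.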

🔍 Keyword Research and Targeting

Sure, search engine crawlers can reach and understand your content without any additional work. But with today's content saturation, it's no longer only about how original your content is, but also about how quickly and easily crawlers can categorize and index it.

Meta tags are tags that stay invisible to visitors and help search engine crawlers determine what your content is about.

The essential tags for SEO are:

- html lang: defines the language of your website

- title: defines the title of your page, visible in search results and in the browser tab

- meta name="description": defines the description of your page, visible in search results

- meta name="viewport": describes the behavior of your website on mobile; responsiveness is also key in SEO
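For reference, here is roughly what those four tags look like in the final page source. This is only an illustrative sketch: `renderSeoTags` and `escapeHtml` are hypothetical helpers, the sample values are placeholders, and the viewport value shown is the commonly used responsive default rather than anything specific to this article.

```javascript
// Escape text so it is safe inside HTML text and attribute values.
function escapeHtml(value) {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Render the tags listed above as an HTML fragment (the opening of a page).
function renderSeoTags({ lang, title, description }) {
  return [
    `<html lang="${escapeHtml(lang)}">`,
    "<head>",
    `  <title>${escapeHtml(title)}</title>`,
    `  <meta name="description" content="${escapeHtml(description)}">`,
    '  <meta name="viewport" content="width=device-width, initial-scale=1">',
    "</head>",
  ].join("\n");
}

console.log(
  renderSeoTags({
    lang: "en",
    title: "SEO with Gatsby",
    description: "Sitemaps, robots.txt, meta tags & performance.",
  })
);
```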

Let's see how easily you can add those tags to your pages using react-helmet.

react-helmet lets you teleport code inside the head tags, so you can change your metadata dynamically.

For a Gatsby website it is recommended to use gatsby-plugin-react-helmet:

    npm install gatsby-plugin-react-helmet react-helmet

and then add it to your gatsby-config file:

    plugins: [`gatsby-plugin-react-helmet`]

Then use Helmet inside a component:

    import React from "react"
    import { Helmet } from "react-helmet"

    class Application extends React.Component {
      render() {
        return (
          <div className="application">
            {/* Everything inside Helmet is moved into the document head */}
            <Helmet>
              <meta charSet="utf-8" />
              <title>My Title</title>
              <link rel="canonical" href="http://mysite.com/example" />
            </Helmet>
          </div>
        )
      }
    }

⚡ Great User Experience

SEO keeps changing as search engine algorithms get more complicated and more demanding. With tons of content pushed to the internet every day and tough competition in every possible niche, search engines have come up with new ways to measure a domain's usefulness and quality: they started monitoring how users interact with the URLs contained in the index.

If your site has high user engagement ratings, then your chances of appearing at the top of the search results are improved.

In July 2018, Google announced a new ranking factor for site speed, calling the algorithm update the “Speed Update”. Google will possibly rank pages higher in the search results for faster loading times, however, the intent of the search query is still very relevant and a slower page can rank higher if the content is more relevant - Gatsby doc

I guess there is no need to explain that performance is key in user experience.

Gatsby gives you a head start here thanks to its built-in performance optimizations, such as rendering to static files, progressive image loading, and the PRPL pattern, which help your site be lightning-fast by default.

Here are a few plugins to improve your website's performance, so you can surpass your Gatsby competitors 😉

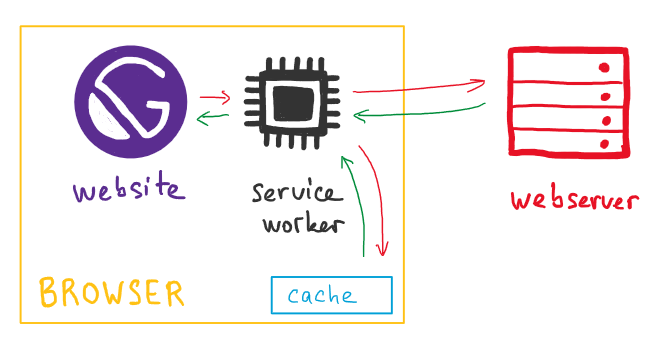

gatsby-plugin-offline:

The goal of this plugin is to make your website more resilient to bad networks. It creates a service worker for the site, a script that your browser runs in the background, to cache the website's assets and make them available when the user's device has a poor network connection.


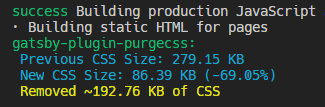

gatsby-plugin-purgecss:

When you build a website, you might decide to include a CSS framework such as Material-UI or Tailwind CSS. You will usually end up using only a small part of that framework, so a lot of unused CSS gets shipped.

This plugin analyzes your content and your CSS files, and removes the CSS that your content never uses.


gatsby-plugin-preload-fonts:

This plugin preloads all required fonts to reduce the time to first meaningful paint and avoid flashing and jumping fonts. It works really well with services like Google Fonts that link to stylesheets that in turn link to font files.


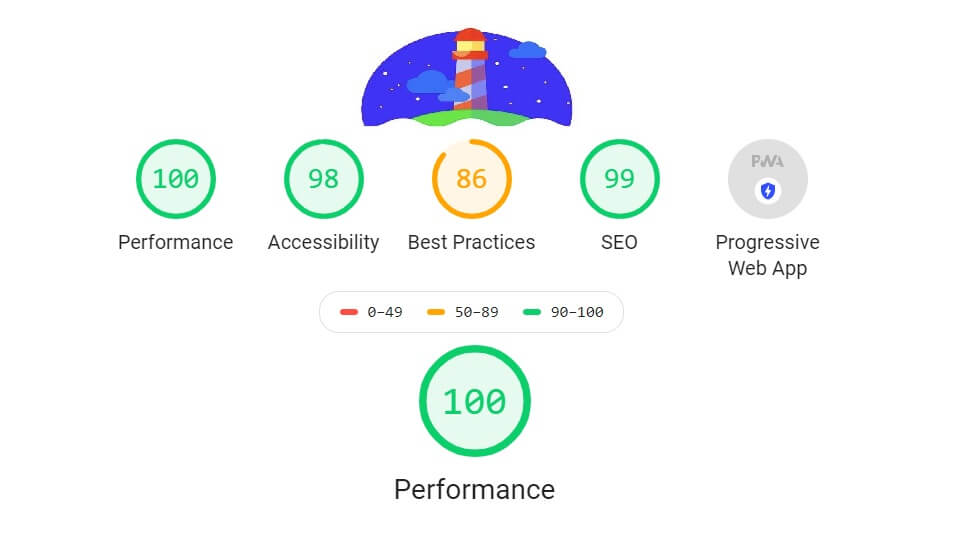

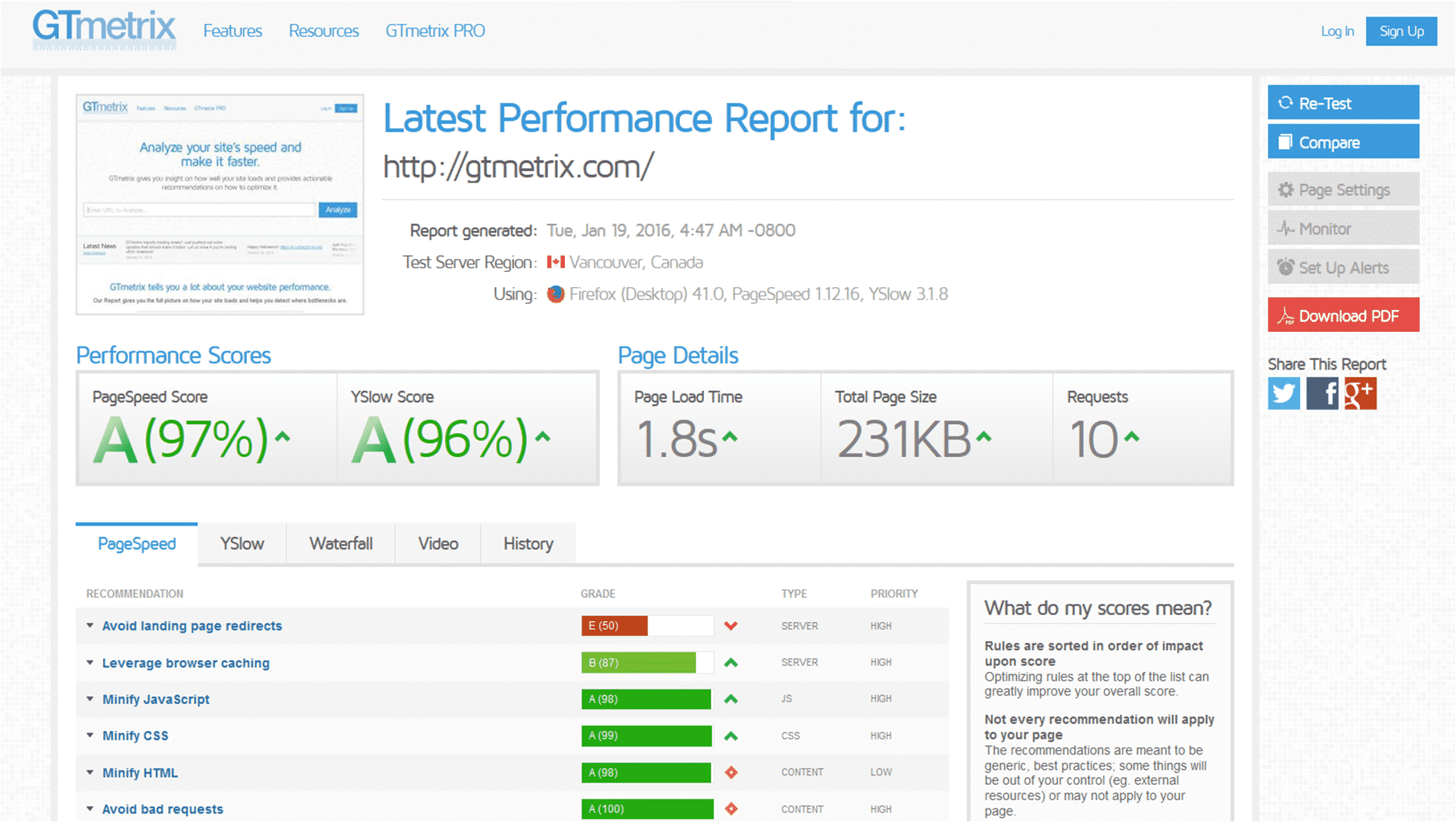

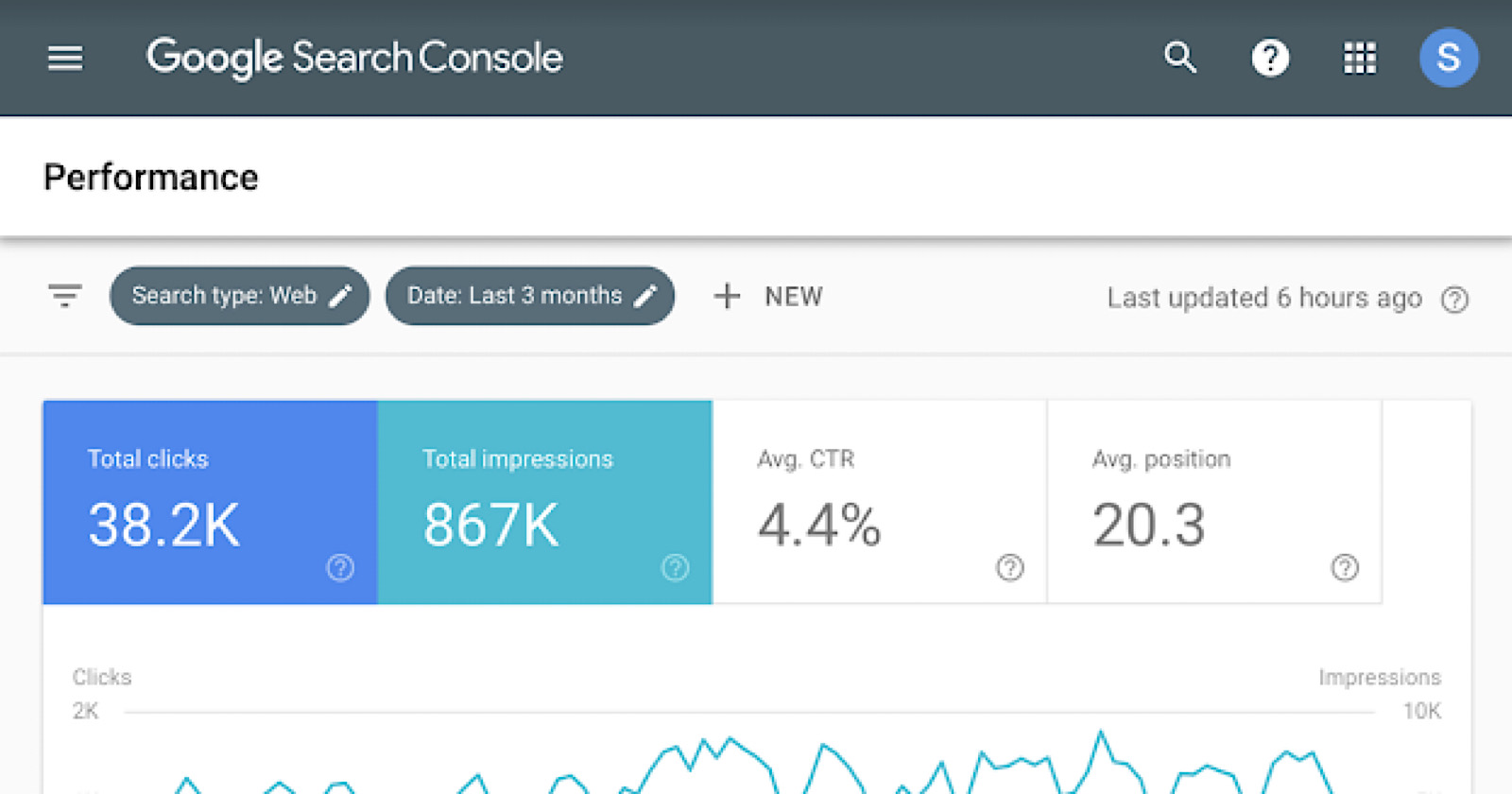

Before you leave I want to show you some tools to track your SEO results:

Lighthouse is a great tool to measure your website's quality. It has audits for performance, accessibility, progressive web apps, SEO, and more. You can run it from Chrome DevTools or with Lighthouse CI.

GTmetrix is a website performance analytics tool that provides a list of actionable recommendations for improving it.

Search Console is a tool that helps you measure your site's Search traffic and performance, fix issues, and make your site shine in Google Search results.

🏁 Wrapping up

By now, you have seen how search engines crawl your website and how to help them along by adding a sitemap and a robots.txt file. We also discussed the importance of meta tags and how easily you can add them to your website using react-helmet. Finally, we looked at a list of Gatsby plugins that help you maintain good performance, which means a good user experience. Sure, there is far more to say about SEO, but with what you learned above, plus good content and a bit of luck, you have a real chance of ending up at the top of search results.